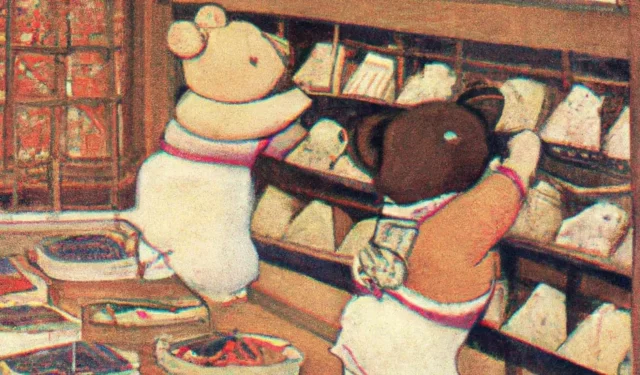

OpenAI's DALL-E 2 artificial intelligence creates cartoon-style images from any text description you can imagine.

In January 2021, the OpenAI consortium, co-founded by Elon Musk and funded by Microsoft, unveiled its most ambitious project to date, the DALL-E machine learning system. This ingenious multimodal artificial intelligence could generate cartoon-style images based only on a text description supplied by the user. More recently, the consortium introduced a new version of DALL-E with higher resolution and lower latency than the original.


The first version of DALL-E (a portmanteau of Dalí and WALL-E) could generate images, combine multiple images into a collage, offer different viewing angles, and even infer certain image elements, such as shadow effects, from a simple written description.

“Unlike a 3D renderer, whose input must be specified unambiguously down to the smallest detail, DALL-E can often ‘fill in the gaps’ when the caption implies that the image contains certain details not explicitly stated,” the OpenAI team explained in 2021.

DALL-E was never intended to be a commercial product, and its capabilities were limited because the OpenAI team treated it purely as a research tool. It was also deliberately restricted to avoid a repeat of Microsoft's experience with its Tay chatbot and to keep the system from being used to generate misinformation. For this second version, the goals remain the same, and a watermark is added to each image to indicate clearly that it was created by artificial intelligence. In addition, the system now prevents users from creating images based on proper names.


DALL-E 2, which uses OpenAI's CLIP image recognition system, builds on its predecessor's image generation capabilities. Users can now select and edit specific areas of existing images, add or remove elements along with their shadows, merge two images into one collage, and create variations of an existing image. The generated images are now 1024px squares, up from the 256px images produced by the original version. CLIP was designed to look at an image and summarize its content in a way that a human can understand. For the new system, the consortium reversed that process, creating an image from its description.

“DALL-E 1 took our GPT-3 approach to language and applied it to create an image: we compressed images into a set of words and learned to predict what would happen next,” researcher Prafulla Dhariwal explained to The Verge.
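To make the idea in that quote concrete, here is a deliberately tiny Python sketch of "turn an image into a sequence of tokens, then learn to predict the next one." It is an illustration only, not OpenAI's method: the real system used a large transformer and a learned image codebook, while this toy uses simple pixel quantization and frequency counts. The function names (`image_to_tokens`, `train`, `generate`) and the sample captions are hypothetical.

```python
from collections import Counter, defaultdict

START = "<start>"  # hypothetical marker for "no image token generated yet"


def image_to_tokens(pixels, levels=4):
    """Quantize 0-255 grayscale pixels into `levels` discrete tokens.

    A crude stand-in for a learned image codebook (an assumption of this sketch).
    """
    return [p * levels // 256 for p in pixels]


def train(examples):
    """Count which token follows each (caption, previous token) context."""
    counts = defaultdict(Counter)
    for caption, tokens in examples:
        prev = START
        for tok in tokens:
            counts[(caption, prev)][tok] += 1
            prev = tok
    return counts


def generate(counts, caption, length):
    """Greedily emit the most frequent next token, one token at a time."""
    tokens, prev = [], START
    for _ in range(length):
        followers = counts.get((caption, prev))
        if not followers:  # context never seen during training
            break
        prev = followers.most_common(1)[0][0]
        tokens.append(prev)
    return tokens


# Two made-up training "images", each a flat list of grayscale pixels.
examples = [
    ("stripes", image_to_tokens([0, 255, 0, 255])),
    ("dark", image_to_tokens([10, 20, 30, 40])),
]
model = train(examples)
print(generate(model, "stripes", 6))
print(generate(model, "dark", 4))
```

The caption acts as the conditioning text, and each new image token is chosen from what followed the previous token during training. A real model conditions on the whole preceding sequence rather than a single token.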

Unlike the first version, which anyone could play with on the OpenAI site, version 2 is currently available only to a small number of selected partners, and even they are limited in what they can do with it. They also cannot export the images they create to third-party platforms, although OpenAI plans to make DALL-E 2's new features available via an API in the future. If you still want to try the system, you can join the waiting list.