Amazon is figuring out how to make its Alexa voice assistant deepfake the voice of anyone, dead or alive, from just a short recording. The company showcased the feature at its re:Mars conference in Las Vegas on Wednesday, using the emotional trauma of the ongoing pandemic and grief to generate interest.

Amazon’s re:Mars focuses on artificial intelligence, machine learning, robotics, and other emerging technologies, with tech experts and industry leaders taking the stage. During the second day’s keynote, Rohit Prasad, Senior Vice President and Chief Scientist of Alexa AI at Amazon, showed off a feature currently being developed for Alexa.

In the demonstration, a child asks Alexa, “Can Grandma finish reading The Wizard of Oz for me?” Alexa replies, “Okay,” in her typical effeminate, robotic voice. But then the voice of the child’s grandmother comes from the speaker, reading L. Frank Baum’s tale.

You can watch a demo below:

Prasad said only that Amazon is “working” on the Alexa capability and did not elaborate on what work remains or on when, or if, it will be available.

He did, however, offer a few minor technical details.

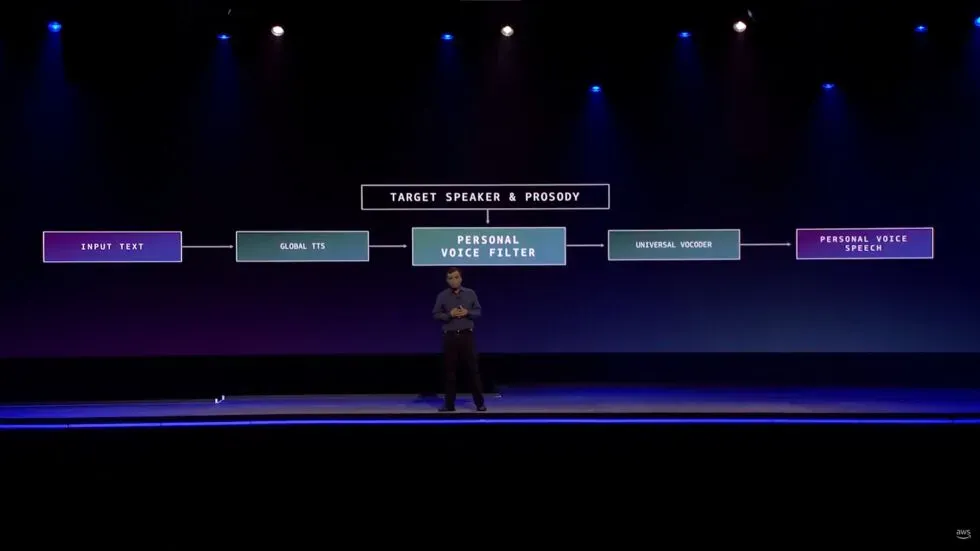

“It required invention when we had to learn how to reproduce a high-quality voice from less than a minute of recording, compared to hours of recording in the studio,” he said. “We made this possible by framing the problem as a voice conversion problem rather than a speech generation problem.”

Of course, deepfakes of all types generally have a mixed reputation. However, some attempts have been made to use the technology as a tool rather than a vehicle for creepiness.

Audio deepfakes in particular, as The Verge notes, have been used in media to cover for cases where, say, a podcaster flubs a line or a project’s star dies suddenly, as happened with the Anthony Bourdain documentary Roadrunner.

The publication notes that there are even cases of people using AI to create chatbots that communicate as if they were lost loved ones.

Alexa won’t even be the first consumer product to use deepfake audio to stand in for a family member who can’t be present in person. A Takara Tomy smart speaker, as Gizmodo points out, uses artificial intelligence to read bedtime stories to children in a parent’s voice. Parents reportedly upload their voices, so to speak, by reading a script for about 15 minutes. This differs markedly from Amazon’s demo, though, in that the product’s owner chooses to provide their own voice, rather than the product using the voice of someone who probably can’t give their permission.

Aside from concerns about deepfakes being used for scams, robberies, and other nefarious activities, there are already some disturbing things about how Amazon is presenting this feature, which doesn’t even have a release date yet.

Before showing the demo, Prasad talked about how Alexa gives users a “camaraderie relationship.”

“In this camaraderie role, the human qualities of empathy and affect are key to building trust,” Prasad said. “These attributes have become even more important during the ongoing pandemic, when many of us have lost someone we love. While the AI cannot eliminate this pain of loss, it can definitely extend their memory.”

Prasad added that the feature “provides a lasting personal relationship.”

It is true that countless people are in serious search of human “empathy and affect” in response to the emotional distress caused by the COVID-19 pandemic. However, Amazon’s AI voice assistant is not the place to satisfy those human needs. Nor can Alexa provide “a lasting personal relationship” with people who are no longer with us.

It’s not hard to believe that there are good intentions behind this in-development feature and that hearing the voice of someone you miss can be a great comfort. In theory, we could even have fun with such a feature. Having Alexa make a friend sound like they said something silly is harmless. And, as noted above, other companies are using deepfake technology in ways similar to what Amazon demonstrated.

But presenting this in-development Alexa capability as a way to reconnect with deceased family members is a giant, unrealistic, and problematic leap. Meanwhile, tugging at the heartstrings by invoking the grief and loneliness associated with the pandemic seems gratuitous. There are places Amazon doesn’t belong, and grief counseling is one of them.