Amazon is trying to make Alexa simpler and more intuitive to use through the use of a new large language model (LLM). During its annual hardware event Wednesday, Amazon demoed the generative-AI-powered Alexa that users can soon preview on Echo devices. But in all its talk of new features and a generative-AI-fueled future, Amazon barely acknowledged the longstanding elephant in the room: privacy.

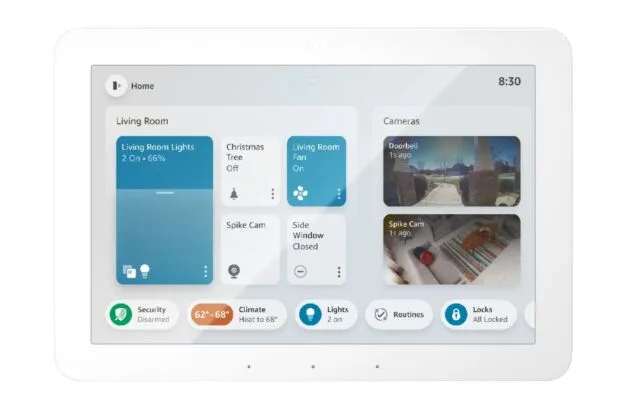

Amazon’s devices event featured a new Echo Show 8, updated Ring devices, and new Fire TV sticks. But most interesting was a look at how the company is trying to navigate generative AI hype and the uncertainty around the future of voice assistants. Amazon said users will be able to start previewing Alexa’s new features via any Echo device, including the original, in a few weeks.

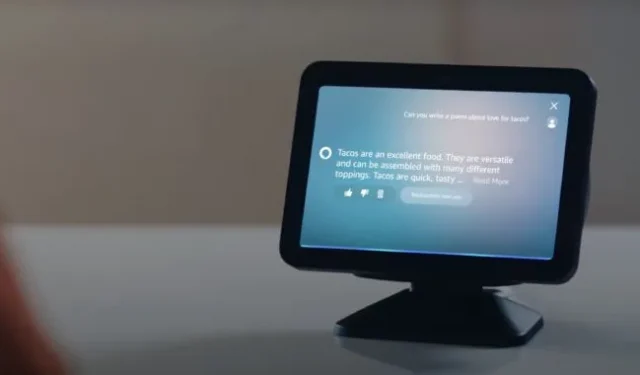

Alexa’s added features are enabled by a new LLM that Amazon says was fine-tuned for voice conversations and that uses algorithms for body language and intonation recognition. The company was clear that Alexa will focus on generative AI going forward. But the new features are in their early stages, Amazon noted, so bumps, bugs, and errors are expected at first.

“Alexa, can I finally stop saying Alexa?”

One of the most immediately impactful changes is that Alexa can now listen without the user needing to say “Alexa” first. A device will be able to use its camera, a user’s pre-created visual ID, and a previous setup with Alexa to determine when someone is speaking to it.

In a demo, David Limp, SVP of devices and services at Amazon, was able to have a conversation with Alexa, step away for a moment, and then return to the device for further conversation without saying “Alexa” again.

“This is made possible by on-device visual processing and acoustic models working in concert trying to determine whether you’re addressing the device or someone else in the room,” Rohit Prasad, SVP and head AI scientist at Amazon, explained on-stage at Amazon’s event.

Amazon is also working to reduce Alexa’s time-to-response and to allow users to pause or use fillers like “um” without breaking the interaction. Prasad said Alexa can do this by using a massive conformer model with billions of parameters.

All this points to an Alexa that listens and watches with more intent than ever. But Amazon’s presentation didn’t detail any new privacy or security capabilities to make sure this new power isn’t used maliciously or in a way that users don’t agree with.

When reached for comment, an Amazon spokesperson noted previously established Alexa privacy features, including a light on Echo devices that shows when Alexa is listening and the ability for users to review and manage their voice history.

The spokesperson added that Amazon uses “numerous tactics and features” to secure devices and customer data, including “rigorous security reviews during development, encryption to protect data, and regular software security updates” and that Amazon will “continue to take steps to further harden” device security.

Looking to reduce the tedium of controlling multiple smart home devices, Amazon is also updating Alexa to simplify smart home commands. For example, Alexa could eventually know how to “turn on the new light in the living room,” even if you don’t know the official “name” of that smart bulb. Amazon also discussed the ability to control multiple devices and set up routines with a single sentence rather than having to say “Alexa” and a device name for each instruction. Other developments include getting Alexa to infer what you want, such as revving up the robovac when you tell it that the house is dirty. Such features, if reliable, would make voice control over smart devices significantly more appealing and intuitive. The features will be previewable “in the coming months,” Amazon said.