On Tuesday, Adobe added a new tool called “Generative Fill” to the Photoshop beta. It uses cloud-based image synthesis to fill selected areas of an image with new AI-generated content based on a text description. Powered by Adobe Firefly, Generative Fill works similarly to the “inpainting” technique used in DALL-E and Stable Diffusion releases since last year.

At the heart of Generative Fill is Adobe Firefly, Adobe’s own image-synthesis model. Firefly is a deep learning AI model trained on millions of Adobe stock photographs to associate imagery with the text that describes it. People can now type in what they want to see (for example, “a clown on a computer display”), and Firefly will offer several options to choose from. Generative Fill uses a well-known AI technique called “inpainting” to produce context-aware generations that blend synthetic imagery seamlessly into an existing image.

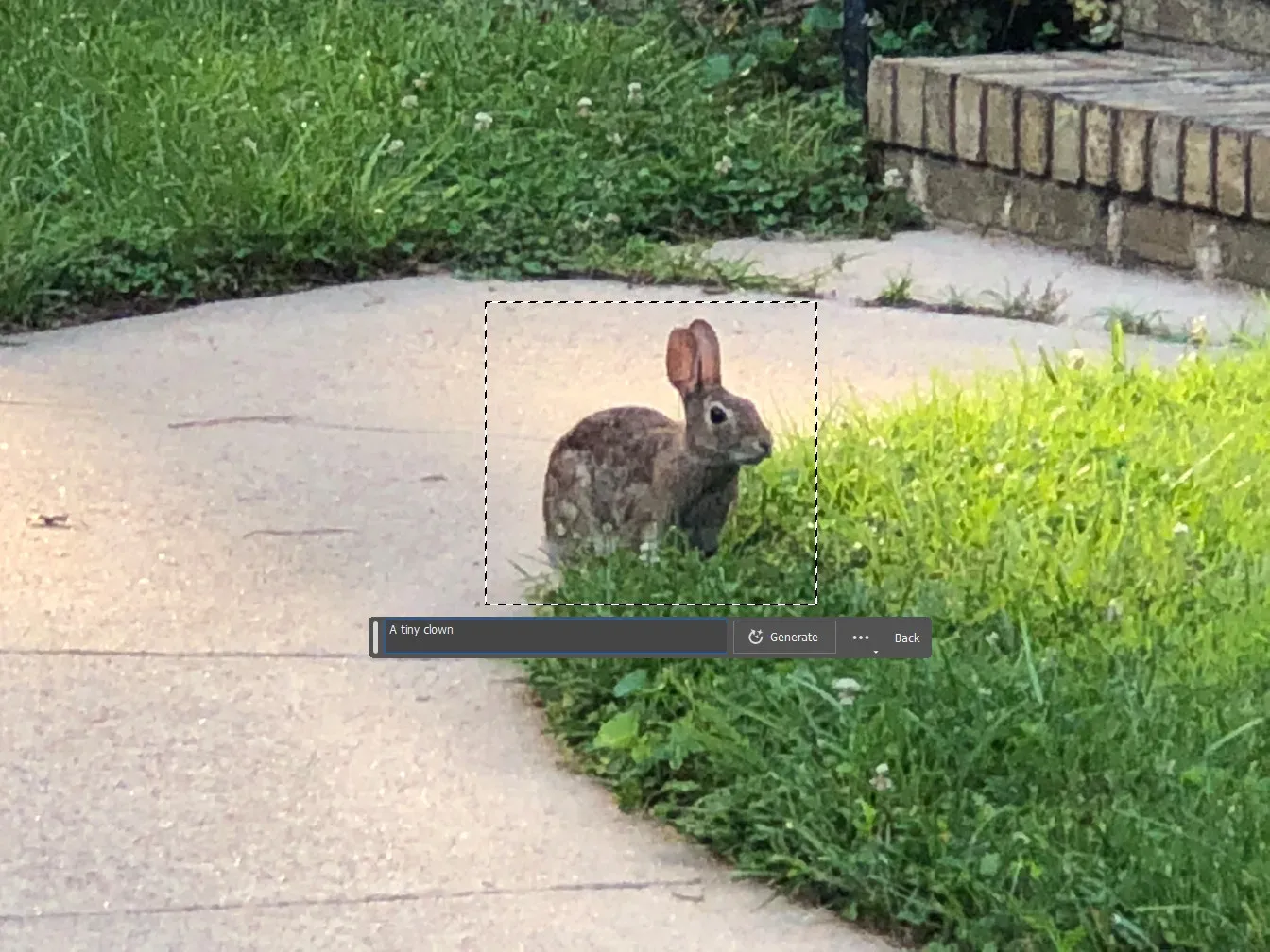

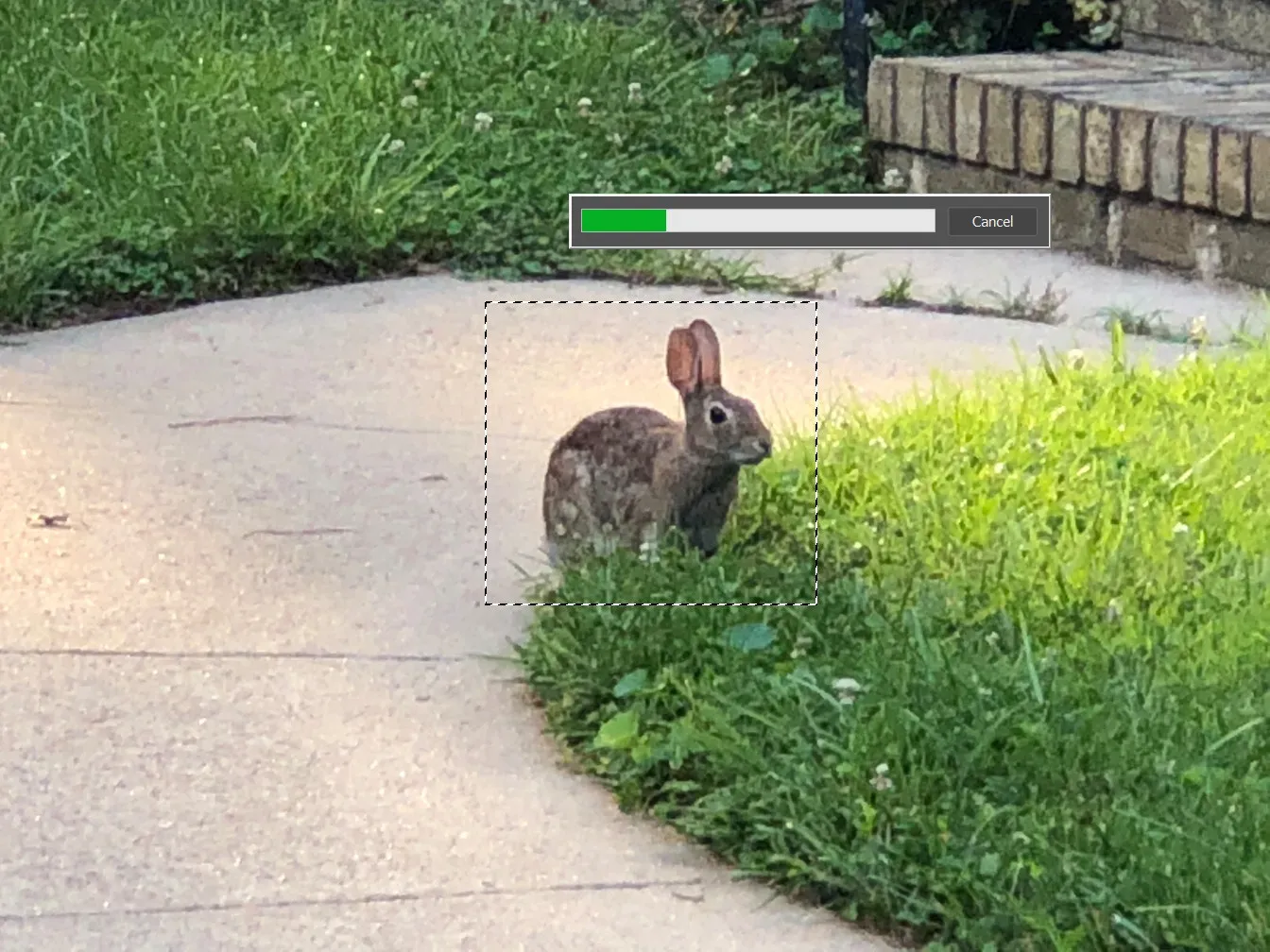

To use Generative Fill, users first select the area of an existing image they want to change. Once the selection is made, a “Contextual Task Bar” appears where they can type a description of what they want generated in the selected region. Photoshop sends this data to Adobe’s servers for processing and then returns the results in the application. After generating, the user can choose among several generated options or create more options to browse.

Using the Generative Fill tool creates a new “Generative Layer,” which allows non-destructive changes to image content, such as additions, extensions, or removals, guided by these text prompts. It automatically adjusts to the perspective, lighting, and style of the image.
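To illustrate the selection and layer behavior described above, here is a minimal Python sketch of mask-based compositing, the basic idea behind keeping an edit confined to a selected region while leaving the original untouched. It is not Adobe’s implementation: the `composite` function, the tiny grayscale “images,” and the constant `generated` values standing in for model output are all hypothetical, and real inpainting also conditions the generated pixels on their surroundings.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, 0-255 (illustrative only)


def composite(original: Image, generated: Image, mask: Image) -> Image:
    """Build a new image: generated pixels where mask is 1, original elsewhere.

    The original image is never modified, which mirrors the idea of a
    non-destructive layer sitting on top of the source pixels.
    """
    return [
        [g if m else o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


original = [[10, 10, 10], [10, 10, 10]]
generated = [[99, 99, 99], [99, 99, 99]]  # stand-in for model output (assumption)
mask = [[0, 1, 0], [0, 1, 1]]             # 1 marks the user-selected region

result = composite(original, generated, mask)
print(result)
```

Because the composite is a separate object, discarding it leaves the source image exactly as it was.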

Generative Fill is not the only new AI-powered feature in the Photoshop beta. Firefly also lets Photoshop remove elements from a scene entirely or expand an image by generating new content around the original, a technique known as “outpainting.”

OpenAI’s DALL-E 2 image generator and editor has had these features since August of last year, as have various homebrew releases of Stable Diffusion since around the same time, so Adobe is only now catching up in adding them to its flagship image editor. Still, that is a fast turnaround for a company that may already have a large liability target painted on its back over concerns about generating harmful or socially stigmatized content, using artists’ images as training data, and powering propaganda or disinformation.

Along those lines, Adobe blocks certain copyrighted, violent, and sexual keywords and also relies on terms of use that prohibit users from creating “abusive, unlawful, or confidential” content.

Additionally, because Generative Fill makes it easy to warp the apparent reality of a photo (admittedly something Photoshop has been doing since its inception), Adobe is stepping up its Content Authenticity Initiative, which uses Content Credentials to add metadata to generated files that helps track their provenance.

Any Creative Cloud user with a Photoshop subscription or free trial can currently access Generative Fill in the Photoshop beta app through the “Beta apps” section of the Creative Cloud app. It is not yet available to users in China or to anyone under the age of 18, and it only supports English text prompts for now. Adobe plans to make Generative Fill available to all Photoshop users by the end of the year so that anybody can quickly create yard clowns.

If you don’t have a Creative Cloud subscription, you can also try Generative Fill for free through a web-based tool on the Adobe Firefly website, which requires an Adobe login. Adobe recently removed the waitlist for the Firefly beta.