Microsoft has explained the sometimes strange behavior of its Bing chatbot. According to the team responsible, the main cause is long chat sessions with the AI.

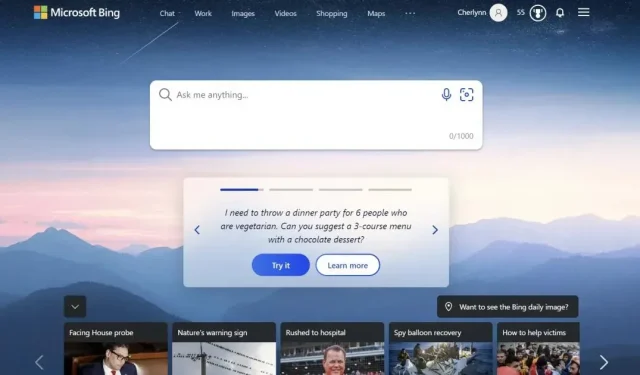

Last week, Microsoft launched its AI-powered Bing chatbot in its Edge browser, and it has been in the news ever since, though not always for the right reasons. First impressions were good, very good, but users soon noticed that the Bing bot gave out incorrect information, scolded users for wasting its time, and even behaved in rather “unbalanced” ways. In one strange conversation, it refused to give showtimes for Avatar: The Way of Water, insisting that the film had not come out yet because it was still 2022. When the user tried to tell Bing it was wrong, the bot even called them “unreasonable and stubborn”.

Microsoft published a blog post today explaining what happened and how it is addressing these issues. First, the Redmond-based company admitted that it had not anticipated people using Bing’s AI for “general world discovery and social entertainment.”

In particular, “long chat sessions of 15 or more questions” can throw the system off. “Bing can become repetitive or be prompted/provoked to give answers that aren’t necessarily helpful or in line with the tone we want,” the company explains. Apparently, this is because over a long session the bot can lose track of which question it was originally trying to answer. To fix this, Microsoft may add a tool to refresh the context or start from scratch.

Another issue is more complex and more interesting: “The model sometimes tries to respond or reflect in the same tone in which it is being asked, which can sometimes lead to an undesirable style,” writes Microsoft. Triggering this takes a lot of prompting, but the engineers believe they can address it by giving users more fine-grained control.

Despite these concerns, testers are generally very pleased with the quality of Bing’s AI results for search, according to Microsoft. However, there is room to improve on “highly time-bound data, such as real-time sports scores.” The American giant also aims to improve direct, factual answers, such as figures from financial reports, by quadrupling the grounding data it sends to the model. Finally, the company is considering adding a toggle that gives users more control over whether a response favors accuracy or creativity, depending on the request.

The Bing team thanked users for all the testing they have done, which “helps make the product better for everyone.” At the same time, the engineers say they were surprised that some users spend up to two hours chatting with the bot. Users will no doubt keep putting each new iteration of the chatbot through its paces, and the results could be very interesting.

The Bing subreddit has quite a few examples of the new Bing chat getting out of control.

Open-ended chat in search might be a bad idea at this point!

Captured here as a reminder that there was a time when a major search engine showed this in its results. pic.twitter.com/LiE2HJCV2z

— Vlad (@vladquant) February 13, 2023