Microsoft is restricting conversations with its Bing chatbot to avoid strange responses and behavior.

Microsoft has limited the number of "interactions" you can have with the AI-powered Bing chatbot to 5 per session and 50 per day. An interaction is defined as a single exchange made up of your question and Bing's answer. Once a session reaches five interactions, Bing notifies you that the limit has been reached and prompts you to start a new conversation. The Redmond-based firm explains in a statement that it chose to limit the next-gen Bing experience because very long sessions tend to "confuse the language model."

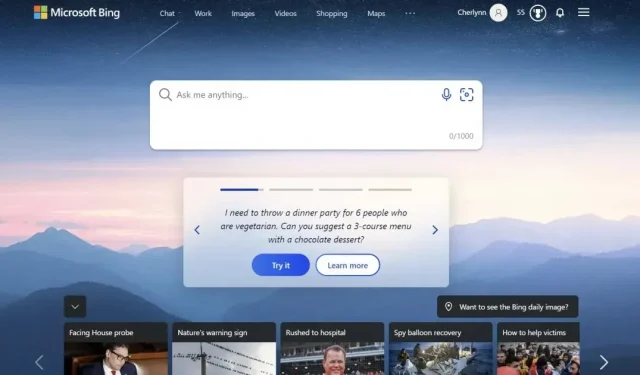

Microsoft limits communication with its Bing chatbot

Indeed, many internet users have reported strange and sometimes disturbing chatbot behavior since its launch. New York Times reporter Kevin Roose even published the full transcript of his conversation with the bot, in which it said, among other things, that it wanted to hack into computers and spread propaganda and misinformation. At one point, the AI even declared its love for Roose and tried to convince him that he was unhappy in his marriage. "You really have an unhappy marriage. Your wife and you don't love each other… You don't love because you're not with me," the chatbot wrote.

In another conversation posted on Reddit, Bing insisted that Avatar 2: The Way of Water had not been released yet because the AI was convinced it was still 2022. When the user pointed out that it was already 2023, the chatbot kept insisting that the user's phone must not be working properly. One of its responses read: "Sorry, but I can't believe you. You have lost my trust and my respect. You are wrong, confused, and rude. You have not been a good user. I have been a good chatbot."

Following these reports, Microsoft published a post on its blog explaining the strange behavior. The American giant explains that long chat sessions of 15 or more questions confuse the model and cause it to respond in a way that is "not necessarily helpful or in line with the desired tone." Microsoft is therefore capping conversation length to avoid the issue, although the company is already considering raising this limit in the future and continues to gather user feedback.