Microsoft is adding personality controls to its Bing AI chatbot, a change that also adds an extra layer of security for users.

Microsoft has quickly made good on its promise to give users more control over the personality of the artificial intelligence built into Bing. Web Services Director Mikhail Parakhin said that 90% of Bing testers should now see the option to change the tone of the chatbot's responses. The “Creative” option allows the algorithm to be more “original and creative”, in other words more “fun”, while the “Precise” option puts the emphasis on clear, concise, and accurate answers. There is also an intermediate option, “Balanced”.

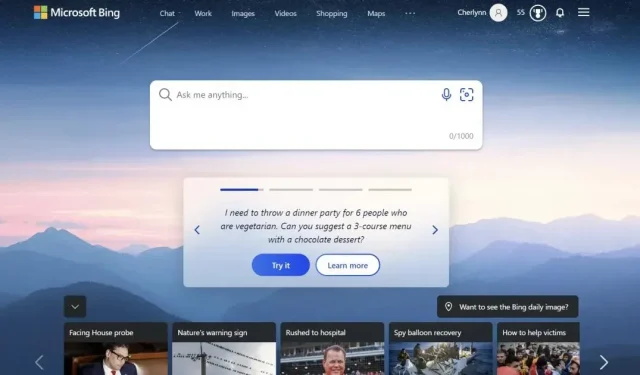

Microsoft adds personality controls to its Bing AI chatbot

The Redmond-based firm reined in Bing's AI responses after early testers noticed sometimes odd, even disturbing, behavior during long conversations and other “entertainment” sessions, as one might call them. As noted by The Verge, these restrictions annoyed some users, because the chatbot would sometimes simply refuse to answer questions. Since then, Microsoft has been gradually lifting the restrictions, and a few days ago the American giant updated its AI to reduce both these refusals to respond and the “hallucinations”, the deviant behaviors seen in early testing. So the chatbot probably won't be as beautifully weird anymore, but it should be more inclined to satisfy your curiosity.

It also adds an extra layer of security in use

This personality option comes as Microsoft expands access to its Bing AI. The company already integrated the technology into its mobile and Skype apps at the end of February, and a few days ago it made the functionality available through the Windows 11 taskbar. This flexibility could make the AI even more useful in these more diverse environments and add an extra layer of security as more and more users try the new feature every day. If you choose the “Creative” option, you will know that you cannot fully trust the results generated by the chatbot.